Visual Language Maps For Robot Navigation

Abstract: Grounding language to the visual observations of a navigating agent can be performed using off-the-shelf visual-language models pretrained on Internet-scale data (e.g., image captions). While this is useful for matching images to natural language descriptions of object goals, it remains …

Visual Language Maps for Robot Navigation. Chenguang Huang, Oier Mees, Andy Zeng, Wolfram Burgard. We present VLMaps (Visual Language Maps), a spatial map representation in which pretrained visual-language model features are fused into a 3D reconstruction of the physical world. Spatially anchoring visual-language features enables natural language indexing in the map, which can be used to, e.g., …

While interacting in the world is a multi-sensory experience, many robots continue to predominantly rely on visual perception to map and navigate in their environments. In this work, we propose Audio-Visual-Language Maps (AVLMaps), a unified 3D spatial map representation for storing cross-modal information from audio, visual, and language cues. AVLMaps integrate the open-vocabulary …
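Fusing features into a 3D reconstruction can be pictured as back-projecting every pixel's feature into the world using depth and camera pose, then averaging the features that land in the same top-down grid cell. The sketch below is a minimal illustration under stated assumptions: a pinhole camera with intrinsics (fx, fy, cx, cy), a 4x4 camera-to-world pose whose world frame has z pointing up, and per-pixel feature vectors supplied by some pretrained visual-language model. `FeatureGrid`, `fuse_frame`, and per-cell averaging are hypothetical choices, not the VLMaps codebase.

```python
import math
from collections import defaultdict
from typing import Iterable, List, Sequence, Tuple


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) with metric depth into the camera frame."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def transform(pose: List[List[float]], p: Sequence[float]) -> Tuple[float, ...]:
    """Apply a 4x4 homogeneous camera-to-world transform (list of rows) to a 3D point."""
    return tuple(sum(pose[i][j] * p[j] for j in range(3)) + pose[i][3] for i in range(3))


class FeatureGrid:
    """Hypothetical top-down grid storing the mean of all features projected into each cell."""

    def __init__(self, cell_size: float):
        self.cell_size = cell_size
        self.sums = {}
        self.counts = defaultdict(int)

    def add(self, point_world: Sequence[float], feature: Sequence[float]) -> None:
        # Drop the height axis (assumed to be world z) to obtain a top-down cell index.
        key = (math.floor(point_world[0] / self.cell_size),
               math.floor(point_world[1] / self.cell_size))
        if key not in self.sums:
            self.sums[key] = [0.0] * len(feature)
        self.sums[key] = [s + f for s, f in zip(self.sums[key], feature)]
        self.counts[key] += 1

    def feature(self, key: Tuple[int, int]) -> List[float]:
        """Mean feature of an occupied cell."""
        n = self.counts[key]
        return [s / n for s in self.sums[key]]


def fuse_frame(grid: FeatureGrid,
               pixels: Iterable[Tuple[float, float, float, Sequence[float]]],
               pose: List[List[float]],
               intrinsics: Tuple[float, float, float, float]) -> None:
    """pixels: (u, v, depth, feature) tuples from one RGB-D frame."""
    fx, fy, cx, cy = intrinsics
    for u, v, depth, feat in pixels:
        if depth <= 0:  # skip invalid depth readings
            continue
        grid.add(transform(pose, backproject(u, v, depth, fx, fy, cx, cy)), feat)


# Camera 1.5 m above the floor, looking along world +x (camera x right, y down, z forward).
pose = [[0, 0, 1, 0], [-1, 0, 0, 0], [0, -1, 0, 1.5], [0, 0, 0, 1]]
grid = FeatureGrid(cell_size=0.5)
fuse_frame(grid,
           [(50, 50, 2.0, [1.0, 0.0]), (50, 50, 2.0, [0.0, 1.0]),
            (150, 50, 2.0, [1.0, 1.0]), (10, 10, 0.0, [9.0, 9.0])],
           pose, (100.0, 100.0, 50.0, 50.0))
```

Here the two centre pixels land in the same cell and their features are averaged, while the zero-depth pixel is ignored. Averaging is only one possible fusion rule; a real system would also need to handle occlusion and many frames.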

VLMaps, which is set to appear at ICRA 2023, is a simple approach that allows robots to (1) index visual landmarks in the map using natural language descriptions, (2) employ Code as Policies to navigate to spatial goals such as "go in between the sofa and TV" or "move three meters to the right of the chair", and (3) generate open-vocabulary …

Project page for Visual Language Maps for Robot Navigation: Chenguang Huang (1), Oier Mees (1), Andy Zeng (2), Wolfram Burgard (3); (1) Freiburg University, (2) Google Research, (3) University of Technology Nuremberg. VLMaps enables spatial goal navigation with language.
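Code as Policies has a language model write short programs over navigation primitives. The sketch below shows, under assumptions, what such primitives might compute for the two example goals. `locate`, `between`, `right_of`, the object coordinates, and the convention that "right" is measured from the robot's current heading are all hypothetical, and the actual motion command is omitted.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

# Hypothetical object centres (metres, z-up world frame), standing in for a language query on the map.
OBJECTS: Dict[str, Point] = {"sofa": (2.0, 4.0), "tv": (6.0, 4.0), "chair": (1.0, 1.0)}


def locate(name: str) -> Point:
    """Hypothetical map lookup returning the centre of a named landmark."""
    return OBJECTS[name]


def between(a: Point, b: Point) -> Point:
    """Midpoint of two landmarks, e.g. 'go in between the sofa and TV'."""
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def right_of(obj: Point, robot_yaw: float, distance: float) -> Point:
    """Point `distance` metres to the right of `obj`, where 'right' follows the robot's heading.

    Yaw is measured counter-clockwise from +x, so the robot's right-hand side is yaw - pi/2.
    """
    ang = robot_yaw - math.pi / 2.0
    return (obj[0] + distance * math.cos(ang), obj[1] + distance * math.sin(ang))


# What a generated program for the two example instructions might compute.
goal_between = between(locate("sofa"), locate("tv"))
goal_right = right_of(locate("chair"), robot_yaw=math.pi / 2.0, distance=3.0)
```

With the robot facing +y, its right-hand side is +x, so "three meters to the right of the chair" resolves to (4.0, 1.0). Keeping geometry in small primitives like these lets the language model compose them rather than reason about coordinates directly.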

VLMaps is a spatial map representation that directly fuses pretrained visual-language features with a 3D reconstruction of the physical world, and it can be shared among multiple robots with different embodiments to generate new obstacle maps on the fly. AVLMaps provide an open-vocabulary 3D map representation for storing cross-modal information from audio, visual, and language cues. When combined with large language models, AVLMaps consume multimodal prompts from audio, vision, and language to solve zero-shot spatial goal navigation, leveraging complementary information sources to disambiguate goals.
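Generating obstacle maps per embodiment can be sketched as selecting, from a shared per-cell category map, the categories that block a particular robot. In this illustration the label grid, the category lists, the assumption that a drone can pass over furniture, and the square `inflate` step for the robot footprint are all hypothetical; `obstacle_map` and `inflate` are not functions from the VLMaps project.

```python
from typing import List, Sequence


def obstacle_map(label_grid: List[List[str]], obstacle_categories: Sequence[str]) -> List[List[bool]]:
    """Mark a cell as blocked when its category label is an obstacle for this embodiment."""
    blocked = set(obstacle_categories)
    return [[label in blocked for label in row] for row in label_grid]


def inflate(grid: List[List[bool]], radius_cells: int) -> List[List[bool]]:
    """Grow obstacles by a square neighbourhood of `radius_cells` to account for the robot footprint."""
    h, w = len(grid), len(grid[0])
    return [
        [
            any(
                grid[i2][j2]
                for i2 in range(max(0, i - radius_cells), min(h, i + radius_cells + 1))
                for j2 in range(max(0, j - radius_cells), min(w, j + radius_cells + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


# Hypothetical labels, e.g. obtained by language-indexing a shared map for each category.
labels = [
    ["floor", "table", "floor"],
    ["wall", "floor", "sofa"],
]
ground_robot = obstacle_map(labels, ["wall", "table", "sofa"])
drone = obstacle_map(labels, ["wall"])  # assumed able to fly over furniture
```

Because only the category list changes between robots, the same map can yield a new obstacle map without re-mapping the scene.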

To address this problem, we propose VLMaps, a spatial map representation that directly fuses pretrained visual-language features with a 3D reconstruction of the physical world. VLMaps can be built autonomously from a robot's video feed using standard exploration approaches, and they enable natural language indexing of the map without additional …

AVLMaps, in turn, integrate the open-vocabulary capabilities of multimodal foundation models pre-trained on Internet-scale data by fusing their features into a centralized 3D …
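Natural language indexing can be sketched as comparing each map cell's fused feature with text embeddings of a list of candidate labels and keeping the cells where the queried label scores highest. In this sketch the two-dimensional vectors are toy stand-ins for real visual-language embeddings, the catch-all "other" label is an assumption, and `index_map` is a hypothetical helper rather than the project's API.

```python
import math
from typing import Dict, List, Sequence, Tuple

Cell = Tuple[int, int]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, returning 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return dot / (na * nb) if na and nb else 0.0


def index_map(cell_features: Dict[Cell, Sequence[float]],
              text_embeddings: Dict[str, Sequence[float]],
              query: str) -> List[Cell]:
    """Return the cells whose most similar label (among all candidate labels) is `query`."""
    hits = []
    for cell, feat in cell_features.items():
        best = max(text_embeddings, key=lambda label: cosine(feat, text_embeddings[label]))
        if best == query:
            hits.append(cell)
    return sorted(hits)


# Toy embeddings; a real map would use the visual-language model's image and text encoders.
text = {"sofa": [1.0, 0.0], "tv": [0.0, 1.0], "other": [-1.0, -1.0]}
cells = {(0, 0): [0.9, 0.1], (0, 1): [0.2, 0.8], (1, 0): [0.7, 0.3]}
sofa_cells = index_map(cells, text, "sofa")
```

Scoring against several labels at once, instead of thresholding similarity to the query alone, gives each cell a single best label, which is convenient when the result is later turned into goals or obstacle maps.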

[desc-10] [desc-11]

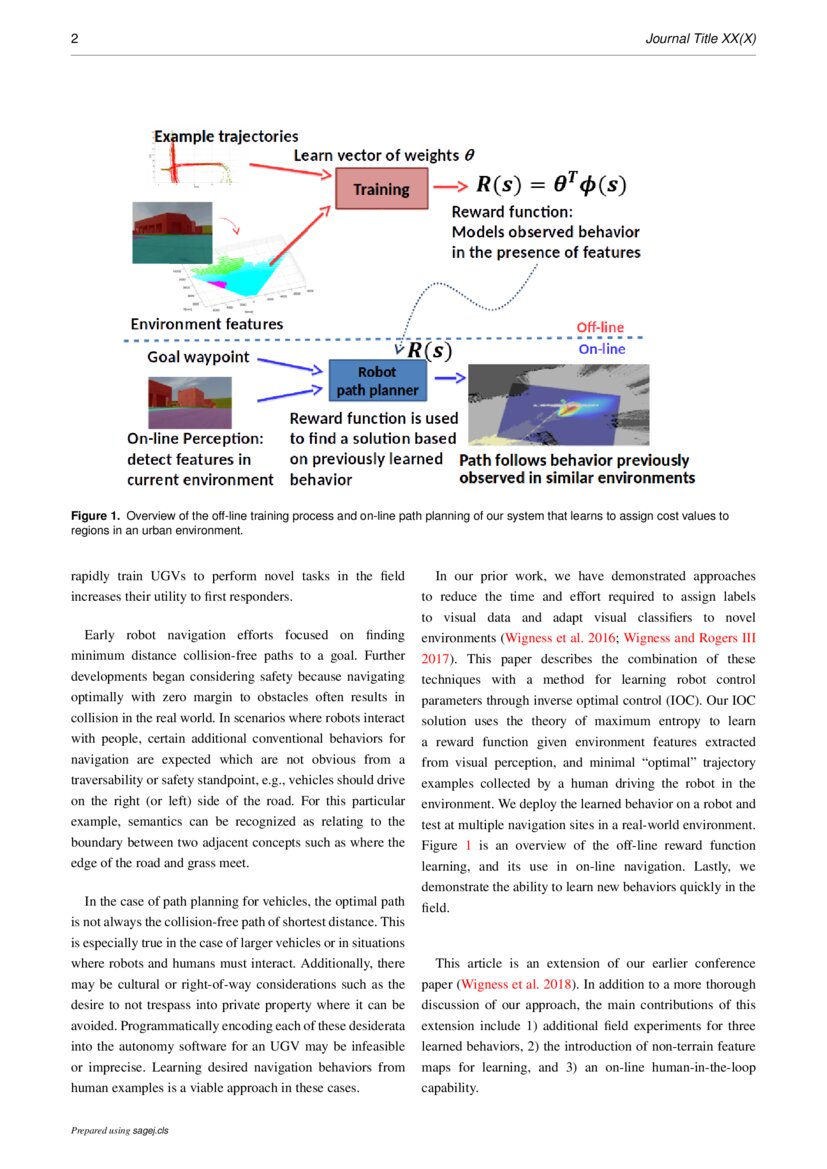

Robot Navigation From Human Demonstration Learning Control Behaviors

https://images.deepai.org/publication-preview/robot-navigation-from-human-demonstration-learning-control-behaviors-with-environment-feature-maps-page-2-medium.jpg

Probabilistic And Deep Learning Techniques For Robot Navigation And

https://i.ytimg.com/vi/ppc7uRQqti4/maxresdefault.jpg

Visual Language Maps For Robot Navigation - VLMaps which is set to appear at ICRA 2023 is a simple approach that allows robots to 1 index visual landmarks in the map using natural language descriptions 2 employ Code as Policies to navigate to spatial goals such as go in between the sofa and TV or move three meters to the right of the chair and 3 generate open vocabulary